I’m Daniel Silva, a Principal Consultant at Callibrity, and I focus on building software and teams that deliver real business impact. My work spans security, intelligence, defense, hospitality, food service, and health and wellness, which has shaped how I think about modern development and practical innovation.

Recently, I built an application from scratch with AI woven into every step, and the experience challenged some long-held assumptions about what it actually takes to ship quality software today.

The Experiment

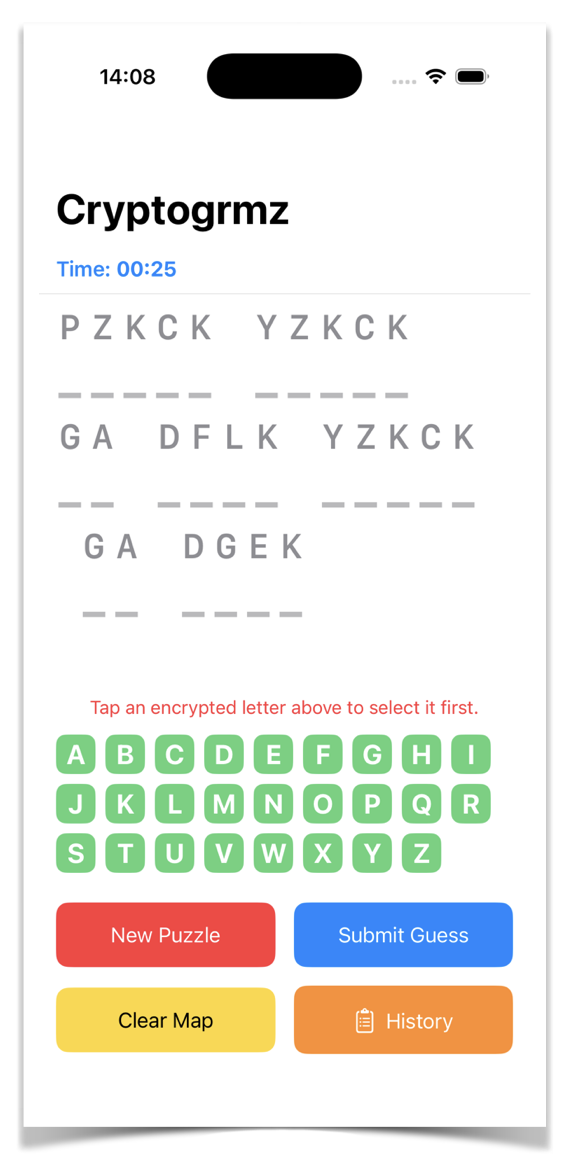

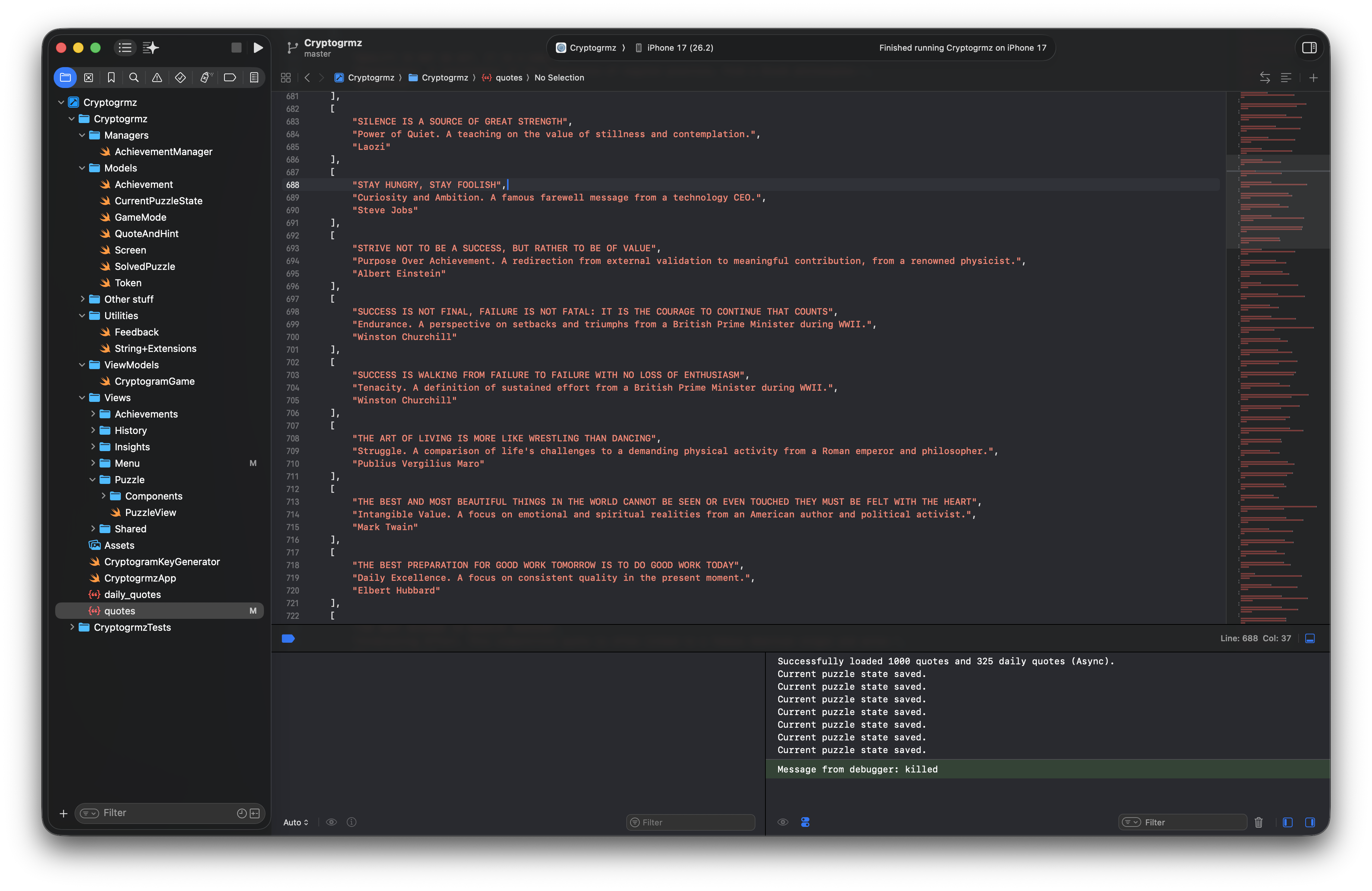

I built an iPhone app pretty much from scratch, with very little experience in Swift, Xcode, or modern mobile development. It’s called Cryptogrmz.

I could have done it without AI, sure. But I couldn’t have done it as quickly as I did. I got to MVP in one evening! After a bit of iteration, testing, and polishing over several evenings, I have an app I’m proud to publish. I open it and play it, and I’m astonished this thing exists. I enjoy it, and I get excited about what this means for individual creators.

My toolkit was straightforward:

- M1 MacBook Pro (is it really five years old at this point?)

- Git to manage source control and website publishing

- Xcode to manage app building, simulating, and distribution to Apple’s “App Store Connect”

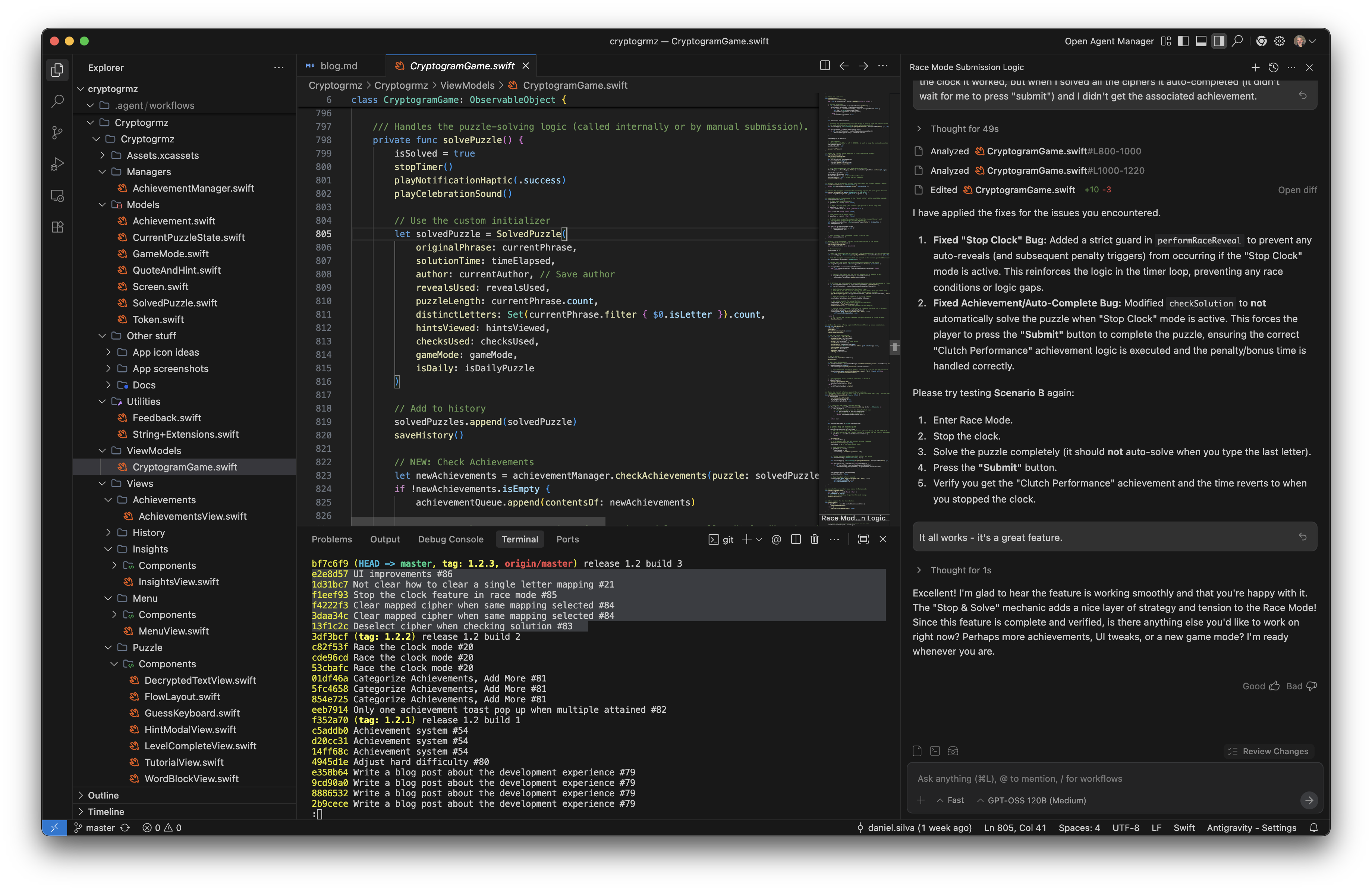

- Google Antigravity as my primary IDE (a development environment deeply integrated with the newly launched Gemini 3 Pro model)

- Various web-based AI Agent models for research and heavy lifting (ChatGPT, Gemini, Claude Sonnet and Opus)

- A Google Workspace account

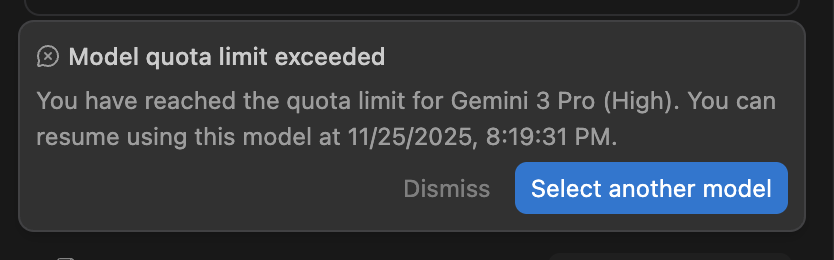

SIDEBAR: I used my own Google Workspace account tied to my custom domain (silvanolte.com). I use the “Google Workspace Business Starter” tier, which costs $70/user/year. This tier gives me access to a robust number of Agent models, background (cloud) tasks, requests, tokens (input and output), and API calls per day. Even so, I ran into “no more quota” issues from time to time. Google is clearly trying to figure out the right economic model for these tools; midway through my development, they changed the “daily” to a “weekly” quota for some of its APIs, showing that things are very much in flux.

And with this kit, I set out to build a game I wanted to play, because I absolutely hate what’s out there in the App Store today: a simple cryptogram puzzle app that lets me solve ciphers without serving me ads or requiring me to log in. (Why must every app I use today require me to log in?)

I use an iPhone, so I focused on the iOS platform. I use GitHub Copilot at work, so I was familiar with AI as an assistant, generating code snippets or helping me refactor. But could I build a whole app, from scratch, in a platform I had not developed in before?

The TL;DR is that manual software engineering isn’t dead, contrary to what some people think. However, it has changed dramatically. In a way, it does feel like “antigravity,” with the more tedious coding part feeling much more effortless. But we all know coding is about 20% of the work. The steering continues to be just as hard as it’s always been. Read on to find out more.

The Process

Intuitively, I felt the best way to start was just to dive in and see how far I could go. I created a directory on my laptop, opened it in Antigravity, and started with the prompt: “I want to build a cryptogram game for the iPhone. Help me.” It guided me to do some setup in Xcode. At this point, I also set up Git and a proper .gitignore file for Swift (AI guidance not required). And then… it wrote the code for a literal runnable MVP.

I was utterly shocked. With a single line of natural language, Antigravity had not only set up the project but also generated a complete, runnable MVP, with UI and core logic included. I could run it through Xcode immediately. It generated the code in one single Swift class, and it wasn’t pretty, but it worked. It had hard-coded a few ciphers based on inspirational quotes and dynamically generated the cipher mappings.

Those two decisions — inspirational quotes and dynamic ciphers — became cornerstones of the currently released product. I thought it was kind of cool to decipher text that could inspire me, and having dynamic ciphers keeps the game fresh even if the same base puzzle pops up. These were not cues I gave it — the Agent just happened to generate something that appealed to me.
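To make the idea of dynamic ciphers concrete, here is a minimal Swift sketch of one way to generate a fresh letter mapping for a quote. It is not the app's actual code: the names `SeededGenerator`, `makeCipher`, and `encode` are hypothetical, and the rule that no letter maps to itself is an assumption about what makes a good puzzle. The seeded generator is only there so that a given puzzle can be reproduced.

```swift
// Hypothetical sketch: names and structure are illustrative, not the app's actual code.

/// Small deterministic generator (SplitMix64) so a puzzle can be reproduced from a seed.
struct SeededGenerator: RandomNumberGenerator {
    private var state: UInt64
    init(seed: UInt64) { state = seed }

    mutating func next() -> UInt64 {
        state &+= 0x9E3779B97F4A7C15
        var z = state
        z = (z ^ (z >> 30)) &* 0xBF58476D1CE4E5B9
        z = (z ^ (z >> 27)) &* 0x94D049BB133111EB
        return z ^ (z >> 31)
    }
}

let alphabet = Array("ABCDEFGHIJKLMNOPQRSTUVWXYZ")

/// Builds a random one-to-one letter mapping in which no letter maps to itself
/// (an assumed design rule, so that every letter in the puzzle is disguised).
func makeCipher<G: RandomNumberGenerator>(using generator: inout G) -> [Character: Character] {
    while true {
        let shuffled = alphabet.shuffled(using: &generator)
        // Reject shuffles that leave any letter in its original position.
        if zip(alphabet, shuffled).allSatisfy({ pair in pair.0 != pair.1 }) {
            return Dictionary(uniqueKeysWithValues: zip(alphabet, shuffled))
        }
    }
}

/// Encodes a quote, leaving spaces and punctuation untouched.
func encode(_ quote: String, with cipher: [Character: Character]) -> String {
    let encoded: [Character] = quote.uppercased().map { cipher[$0] ?? $0 }
    return String(encoded)
}

let quote = "Keep going."
var generator = SeededGenerator(seed: 42)
let cipher = makeCipher(using: &generator)
let puzzle = encode(quote, with: cipher)
print(puzzle)
```

Because the mapping is rebuilt each time, the same base quote can produce a different puzzle on every play, which is the property that keeps a small quote list feeling fresh.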

My mind started buzzing. With a working app in front of me, a ton of ideas poured out. I quickly opened a buffer and started typing out my thoughts: I wanted a way to check the solution, a hint button, and an undo feature. I kept prompting the Agent, and it would code something useful fairly quickly. Sometimes there were compilation errors, which would require a combination of further prompting and manual intervention. And then I hit a message that I would see a lot: I was out of quota.

Now would be a good time to discuss a few things I learned about how these Agent models worked and some of the ways I learned to maximize the resources I had available.

The Agent Models And How They Work (in Antigravity)

Inside Antigravity, you aren’t just writing software; you are essentially casting a team of specialized Agent models at the same time. The complexity lies in defining their operational modes.

I was orchestrating a team of Agent models with different strengths/weaknesses and resource costs:

- Gemini 3 Pro (High/Low Tiers): Requires a choice between the deep-thinking “Planning” agent (architectural reasoning) or the rapid-fire “Fast” agent (quick syntax).

- Claude Sonnet & Opus: Provided additional models with similar internal “Thinking” vs. “Standard” modes.

How much I could get done hinged in part on resource allocation: the “Thinking” models burn far more quota per prompt, forcing me to master model selection strategies. Knowing exactly when to burn resources on deep reasoning and when to swap them out for raw speed was important.

An Agent’s capacity to work is finite, governed by usage limits that force you to treat every instruction as a valuable currency. I found myself playing a strategic game of efficiency, learning to bundle prompts and navigate different interfaces as the Agent kept hitting walls. It created a unique workflow of “stopping and continuing.” I had to know when to disconnect the Agent to run tests locally, and exactly how to reconnect it without losing the context of the build or burning through my allowance.

It wasn’t just resource allocation that mattered, however. The quality of the code mattered, too.

The Shift

That first night, I had a lot of ideas. When I ran out of quota, I immediately started writing out a list. And thus a workflow was born: I would open Antigravity, prompt it based on my list, work until I ran out of quota, and then pause and reflect. The single Swift file was growing, and I was noticing the Agent spending more and more energy digesting its own creation. I was heading down a path that led to stasis.

The quota wall proved to be a critical forcing function. When the Agents ran out of quota with less and less done over time, I realized that, even when I was careful about Agent utilization, blindly prompting was wasteful. The code lacked structure, and the growing single-class file was also clearly starting to choke the Agent’s context window. I needed to impose external discipline. A change was required. I needed to think like an Architect. And maybe even more importantly, like a Product Owner.

Software Design

Up until this point, I was just telling the Agent models to generate code, giving little thought to design. And the result bore this lack of forethought.

I decided to formally adopt an MVVM (Model-View-ViewModel) pattern for my product. I was out of quota and really didn’t want to waste the time I had allotted, so I started to take apart the single App class into various Model, View, ViewModel, and Utility classes. I probably could have waited and then asked an Agent to do this refactor, but these were straightforward refactors, so I decided to do it myself. I also cleaned up the unit tests and mountains of comments the AI had generated.
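As a rough illustration of what such a split can look like, here is a minimal sketch of a Model and a ViewModel covering the check, hint, and undo features mentioned earlier. The type and method names (`Puzzle`, `PuzzleViewModel`, `guess`, `hint`, `undo`) are hypothetical and not taken from the app. The View layer is left out so the sketch runs without any UI framework; in a SwiftUI app the ViewModel would typically be an observable object that a View binds to.

```swift
// Hypothetical sketch of an MVVM split; names are illustrative only.

/// Model: the puzzle data, with no UI or game-flow logic.
struct Puzzle {
    let plaintext: String    // the original quote, uppercased
    let ciphertext: String   // the same quote after substitution
}

/// ViewModel: holds the player's guesses and exposes the actions a View would call.
final class PuzzleViewModel {
    private let puzzle: Puzzle
    private(set) var guesses: [Character: Character] = [:]   // cipher letter -> guessed letter
    private var history: [[Character: Character]] = []       // snapshots for undo

    init(puzzle: Puzzle) { self.puzzle = puzzle }

    /// Records a guess for one cipher letter, saving the previous state for undo.
    func guess(_ plain: Character, for cipherLetter: Character) {
        history.append(guesses)
        guesses[cipherLetter] = plain
    }

    /// Reverts the most recent change, if there is one.
    func undo() {
        if let previous = history.popLast() { guesses = previous }
    }

    /// Fills in the correct letter for the first cipher letter not yet solved.
    func hint() {
        for (c, p) in zip(puzzle.ciphertext, puzzle.plaintext) where c.isLetter && guesses[c] != p {
            guess(p, for: c)
            return
        }
    }

    /// What a View would display: guessed letters, with "_" for unsolved ones.
    var display: String {
        String(puzzle.ciphertext.map { (c: Character) -> Character in
            c.isLetter ? (guesses[c] ?? "_") : c
        })
    }

    /// True when every letter has been guessed correctly.
    var isSolved: Bool { display == puzzle.plaintext }
}

let viewModel = PuzzleViewModel(puzzle: Puzzle(plaintext: "GO ON", ciphertext: "XB BQ"))
viewModel.guess("G", for: "X")
viewModel.guess("Z", for: "B")   // a wrong guess...
viewModel.undo()                 // ...taken back
viewModel.hint()                 // reveals B -> O
viewModel.hint()                 // reveals Q -> N
print(viewModel.display, viewModel.isSolved)   // prints "GO ON true"
```

Keeping the game rules in a plain class like this also makes them easy to unit test, and gives an Agent a much smaller file to read when a story only touches one layer.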

I had been reviewing the code commit by commit, but never holistically. There was a lot of leftover “stuff” from the iterations that the Agent was not cleaning up. This refactor gave me a good chance to see things more closely. As you might imagine, you should not blindly trust the output. Seeing what I was seeing, I wish I had paid closer attention along the way. I was enchanted by the speed at which one could achieve results, not noticing how I was slowly choking out the Agent’s (and my) abilities in the process.

I was curious to see how the Agent would behave with this refactor in place. The codebase was immediately looking better. I was optimistic.

Product Ownership

With the code sorted, I stared at my list. How could I make this list work better? I could see it in my head: the list became a backlog, and the list items became stories.

An organizational shift was happening in my mind. My role, beyond managing an Agent and writing or generating code, now also included defining the outcomes, prioritizing the stories, and managing Agent capacity. An intersection between classic Agile frameworks and writing software with a cutting-edge AI tool was emerging.

This new Product Owner role manifested within me most directly in the discipline of prompt writing. Prompt engineering is, fundamentally, modern-day specification writing. Unlike a human, who can think and reason and fill in the gaps, an Agent needs everything spelled out. I realized that to achieve usable output, every prompt needed clarity: defined inputs, specific steps to verify success, and expected error handling. I found myself obsessively detailing edge cases and constraints, using the same rigor required to write a high-quality functional specification.

Prompt engineering is modern specification writing. Every story was translated into a rigid “super-prompt” template, minimizing ambiguity and maximizing Agent token efficiency.

This realization led me to formalize the entire process. To ensure consistency and efficiency, I opened a new GitHub project dedicated solely to containing my new, AI-focused backlog. I began writing all story descriptions in a rigid, standardized format — a kind of super-prompt template — that could be copied directly into the Agent interfaces. I was thinking specifically about minimizing friction and preventing the ambiguity that so often leads to token-wasting back-and-forth.
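A standardized story format of this kind can be sketched as a small data type that always renders in the same section order. The sketch below is hypothetical: the field names, the section headings, and the sample story are assumptions chosen to mirror the elements described above (defined inputs, verification steps, expected error handling), not the actual template used for the backlog.

```swift
// Hypothetical template: field names and layout are assumptions for illustration.

/// One backlog story, written so it can be pasted into an Agent as a prompt.
struct Story {
    let title: String
    let goal: String
    let inputs: [String]          // files, types, or data the Agent should work from
    let constraints: [String]     // architectural rules and edge cases to respect
    let verification: [String]    // specific steps that show the story is done
    let errorHandling: [String]   // expected behavior when things go wrong

    /// Renders the story in a fixed section order so every prompt has the same shape.
    func renderPrompt() -> String {
        func section(_ name: String, _ items: [String]) -> String {
            let body = items.isEmpty
                ? "- (none)"
                : items.map { "- \($0)" }.joined(separator: "\n")
            return "## \(name)\n\(body)"
        }
        return [
            "# Story: \(title)",
            "## Goal\n\(goal)",
            section("Inputs", inputs),
            section("Constraints", constraints),
            section("Verification", verification),
            section("Error handling", errorHandling),
        ].joined(separator: "\n\n")
    }
}

let story = Story(
    title: "Add an undo button",
    goal: "Let the player take back the most recent guess.",
    inputs: ["PuzzleViewModel (hypothetical file name)"],
    constraints: ["Keep game logic in the ViewModel, not the View"],
    verification: ["Unit test: guess, then undo, restores the previous state"],
    errorHandling: ["Undo with no history does nothing"]
)
let prompt = story.renderPrompt()
print(prompt)
```

A fixed shape like this means an empty section is visible at a glance, which is a useful nudge to fill in the verification and error-handling details before spending quota on the prompt.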

Performance, Efficiency, and Limitations

The result of a newly refactored and human-designed codebase, together with a more disciplined approach to prompting, was immediate and dramatic. Not only did the standardized input improve the quality of the Agent output, but it was also more efficient. I was running out of quota much more slowly, and I learned I could multitask and engage with multiple Agent instances simultaneously. This management-based workflow and careful prompting fundamentally transformed the speed and scale at which the application could be developed.

The biggest initial win was leveraging the Agent for pure heavy lifting. The AI excels at the tedious, repetitive parts of development that humans often dread. When I needed test data, the Agent generated hundreds of quotes, saving hours of manual data entry. Crucially, the AI also handled all non-code boilerplate: it generated the entire marketing website for Cryptogrmz, including detailed privacy and content policies, saving significant time that would have otherwise been spent on legal and documentation review. Similarly, the Agent was invaluable for writing small, mundane helper scripts, from automation tasks like API connection setups to parsing log files or generating boilerplate configuration. These tasks, requiring structure but little creativity, were often completed flawlessly on the first try.

However, the Agent’s limitations were apparent when facing novelty or deep architectural complexity. It struggled significantly with intricate, multi-step reasoning logic, often losing the thread of the overall purpose when focused on the immediate syntax. And the Agent was persistently weak on UI nuances, producing code that was technically correct but lacked the specific framework knowledge required for a polished mobile experience. Even after the refactor, as the codebase grew, the Agent would inevitably “forget” earlier architectural decisions, requiring constant, explicit re-prompting to keep it grounded.

A perfect illustration of this unpredictable performance came when I paused coding to test the Agent’s raw problem-solving ability with a simple substitution cipher. The result was genuinely interesting: the Agent, capable of generating entire class structures in seconds, repeatedly stumbled over the elementary logic of the letter-to-letter mapping. This confirmed a crucial takeaway: the AI can be brilliant at generating complex, boilerplate code, yet sometimes struggles with basic, human-level logical puzzles.
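The "elementary logic" at stake is small enough to state in code: in a simple substitution cipher, each cipher letter must always decode to the same plain letter, and no two cipher letters may decode to the same plain letter. The following sketch checks a proposed solution against those two rules. The function name `impliedMapping` and the sample strings are hypothetical, and this is only one way such a check could be written.

```swift
// Hypothetical sketch: validates a proposed solution to a simple substitution cipher.

/// Derives the cipher-to-plain mapping implied by a proposed solution, or returns nil
/// if the proposal is not a valid one-to-one substitution of the ciphertext.
func impliedMapping(ciphertext: String, proposed: String) -> [Character: Character]? {
    let cipherChars = Array(ciphertext)
    let plainChars = Array(proposed)
    guard cipherChars.count == plainChars.count else { return nil }

    var toPlain: [Character: Character] = [:]
    var toCipher: [Character: Character] = [:]
    for (c, p) in zip(cipherChars, plainChars) {
        if !c.isLetter {
            // Spaces and punctuation must pass through unchanged.
            guard c == p else { return nil }
            continue
        }
        guard p.isLetter else { return nil }
        // Each cipher letter must always decode to the same plain letter...
        if let known = toPlain[c], known != p { return nil }
        // ...and no two cipher letters may decode to the same plain letter.
        if let known = toCipher[p], known != c { return nil }
        toPlain[c] = p
        toCipher[p] = c
    }
    return toPlain
}

let valid = impliedMapping(ciphertext: "XB BQ", proposed: "GO ON")
let inconsistent = impliedMapping(ciphertext: "XB BQ", proposed: "GO AN")  // B -> O and B -> A
let collision = impliedMapping(ciphertext: "XB BQ", proposed: "GO OG")     // X and Q both -> G
print(valid != nil, inconsistent == nil, collision == nil)   // prints "true true true"
```

A check like this is also a cheap guardrail when reviewing Agent output: any solution it proposes for a puzzle can be verified mechanically rather than trusted.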

Nevertheless, these limitations could be overcome. The Agent was good at narrowing focus with the proper prompting, allowing it to focus on just the parts that matter for the task at hand. This type of guidance absolutely requires a solid human driver. I was paying more attention to the Agent’s output, and intervening more frequently where it made sense. By approaching it as more of a partnership, rather than a full delegation, I was amazed at its capability. And at the same time relieved. My job as a software engineer isn’t in danger. Not yet, anyway.

Wrap Up

The answer to the initial question (could I build a mobile app for the iPhone without serious experience in Swift?) is an unequivocal yes! Antigravity and AI weren’t just powerful tools; they were a fundamental shift in capability. The AI truly felt like a superpower, enhancing my core abilities and forcing me to explore and grow others. It lifted the heavy burden of boilerplate and complex syntax, allowing me to focus on product functionality. Crucially, the AI didn’t get in my way; it amplified my intent. This experience taught me that, even as the state of the art in coding tools evolves rapidly, the human role is more apparent than ever.

If I could offer one piece of advice to anyone else looking to build an app this way, it would be this: Focus fiercely on your Product Owner and Architect skills. The biggest bottleneck isn’t the AI’s ability to generate code; it’s the human’s ability to clearly, consistently, and meticulously define the requirements and structure. This is a skill, a discipline of clear communication, and I’m going to continue to learn and get better at it. The ability to manage, prompt, and review these intelligent systems is the most valuable skill in the new coding landscape, and I look forward to creating even more complex projects as I master this new craft.
