Journey into Protobuf

A journey into Protobuf. What is it, why use it and some examples.

What is Protobuf

Protobuf (a.k.a. Protocol Buffers) is a serialization mechanism that Google developed a few years ago to handle an index server request/response protocol. Protocol buffers have since become Google's lingua franca for data: there are currently 48,162 different message types defined in 12,183 .proto files, and they are used in a variety of RPC and storage systems inside Google. [1]

Why use Protobuf

Google originally designed protobuf as a better way to transmit data between its systems than XML, which produces larger payloads, is slower to process and is more complicated to use. Perhaps surprisingly, as you will see later in this blog, protobuf can even outperform JSON.

Install Protobuf Compiler

- Mac:

brew install protobuf

Description of Example Protobuf Message

There are 3 protobuf message types used later in this blog, and they are ArticleCollection, Article and Author.

ArticleCollection is very simple: it only contains a series of articles. The Article message type contains the following data definitions:

- id - integer

- title - string

- snippet - string

- content - string

- isFeatured - boolean

- topic - enum (repeated)

- author - another protobuf message

The Author message type has the following data definitions:

- id - integer

- name - string

- email - string

Define protobuf messages

To create the protobuf messages described above, you define message types in .proto files to structure the information you want to serialize and deserialize. Each protobuf message is a small chunk of structured information containing name-value pairs. In protobuf syntax, a piece of structured data is called a message. The following message definitions reflect the structured data in our example:

1.article_collection.proto file

syntax = "proto3";

package tutorial;

message Article {

int32 id = 1;

string title = 3;

string snippet = 4;

string content = 5;

bool isFeatured = 6;

enum Topic {

SCIENCE = 0;

TECHNOLOGY = 1;

NATURE = 2;

ENTERTAINMENT = 3;

POLITICS = 4;

}

message Author {

int32 id = 1;

string name = 2;

string email = 3;

}

Author author = 7;

repeated Topic topic = 8;

}

message ArticleCollection {

repeated Article article = 1;

}

The message format and definition are simple. Each message type is composed of one or more uniquely numbered data fields, and each field is a name-value pair, where the value can be a number (integer or floating-point), a boolean, a string, raw bytes, or even another protobuf message type (as seen above), which lets you structure your data more clearly and cleanly. The unique numbers identify the fields in the encoded message data; you can find out more about how a protobuf message is encoded on the Encoding page.
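To make the role of the field numbers concrete, here is a minimal hand-rolled sketch of how two Article fields end up on the wire. It is not the code that protoc generates; the helper names (encode_varint, encode_key) are made up for illustration, and only varint and length-delimited fields are covered.

```python
VARINT, LEN = 0, 2  # wire types for int32/bool/enum and for string/bytes/messages


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte)."""
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def encode_key(field_number: int, wire_type: int) -> bytes:
    """A field key is the field number shifted left by 3, OR-ed with the wire type."""
    return encode_varint((field_number << 3) | wire_type)


# Article.id = 150 (field number 1, varint)
id_bytes = encode_key(1, VARINT) + encode_varint(150)

# Article.title = "Hi" (field number 3, length-delimited)
title = "Hi".encode("utf-8")
title_bytes = encode_key(3, LEN) + encode_varint(len(title)) + title

print(id_bytes.hex())     # 089601
print(title_bytes.hex())  # 1a024869
```

Note that the field names (id, title) never appear in the output; only the numbers do, which is why they must stay unique and stable.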

Description of example programs

2 programs were developed for this blog. Both are available in this github repository.

The first program is very simple and consists of two tasks: a reader and a writer. The writer is responsible for serializing message data and writing it to disk. The reader deserializes the message stored on disk and reads the data back into the program for display.

The second program is a web app composed of a react frontend and a dummy backend web service api. The frontend is mainly consuming and displaying data requested from the web service.

Compile .proto file

Once the messages are defined, you run the protobuf compiler on the .proto file, specifying the programming language you want to use, to generate the corresponding data access classes. These classes provide getters and setters for each field defined in the .proto file (e.g. in this example, name() and set_name()), as well as methods to serialize and deserialize message data.

- In the first example program, C++ is used. To get the corresponding classes, run the protobuf compiler:

protoc -I=$SRC_DIR --cpp_out=$DEST_DIR $SRC_DIR/article_collection.proto

This will generate two files:

- article_collection.pb.h

- article_collection.pb.cc

In the C++ header file, you can see the class definitions for all of the message types specified in the .proto file: Author, Article and ArticleCollection.

You can then use any of these classes to serialize and deserialize data, e.g. to populate and read back an Author message.

To write a message to a file on disk:

tutorial::Article::Author author;

author.set_id(1111);

author.set_name("Yan");

author.set_email("yan@example.com");

std::fstream fout("example_file", std::ios::out | std::ios::binary);

author.SerializeToOstream(&fout);

To read the message back from the file:

std::fstream fin("example_file", std::ios::in | std::ios::binary);

tutorial::Article::Author author;

author.ParseFromIstream(&fin);

std::cout << "Name: " << author.name() << std::endl << "E-mail: " << author.email() << std::endl;

- In the second program, the web service is written in Python. To get the Python version of the data classes, you only need to change the --cpp_out option in the compiler command above to --python_out. For the React frontend, we will be using a JavaScript package called protobufjs, which will be explained later.

Protobuf also has a great feature: it supports backward compatibility. Developers can keep adding new fields to existing message definitions, and old binaries will simply ignore the new fields when parsing messages serialized by newer code.
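The idea of an old reader ignoring new fields can be sketched by hand. The following is a simplified stand-in for a generated parser, not the real one: parse_author and read_varint are hypothetical helpers, field number 4 is an invented "new" field, and only the two wire types used by Author are handled.

```python
def read_varint(buf: bytes, pos: int):
    """Decode a base-128 varint starting at pos; return (value, next_pos)."""
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def parse_author(buf: bytes) -> dict:
    """Decode an Author the way an 'old' reader would: it only knows fields 1 to 3."""
    known = {1: "id", 2: "name", 3: "email"}
    author, pos = {}, 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:    # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited
            length, pos = read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        else:
            raise ValueError(f"wire type {wire_type} is not handled in this sketch")
        if field_number in known:
            name = known[field_number]
            author[name] = value.decode("utf-8") if wire_type == 2 else value
        # Unknown field numbers fall through here and are simply dropped.
    return author


# Bytes from a "new" writer: id=7, name="Yan", plus a hypothetical field 4 = "NZ"
new_message = bytes.fromhex("0807" "120359616e" "22024e5a")
print(parse_author(new_message))  # {'id': 7, 'name': 'Yan'}
```

Because every field carries its number and wire type, the reader always knows how many bytes to skip, even for a field it has never heard of.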

You can find more detailed reference for using auto generated protobuf code in the API page.

Code the reader and writer

As stated before, the writer will write structured data to disk so that other programs can read it later. If we don't use protobuf, there are other choices. One possible solution is to convert the data into a plain string and write that string to disk. This is simple, e.g. the id number 111011 could become the string "111011".

There is nothing wrong with this solution, but on reflection it complicates the task of the developer writing the reader and the writer. E.g. the id number 111011 can be written as the single string 111011, but also as a delimited string such as 1|1|1|0|1|1. The writer program then has to define rules for using delimiters, and the reader program has to deal with the different delimiters in the encoded message, which can lead to other problems. In the end, a simple program needs a lot of additional code just to deal with the message format.
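A small sketch shows how quickly a hand-rolled delimiter format runs into trouble. The record layout (id, title, snippet joined with "|") and the function names are assumptions made up for this illustration.

```python
def write_record(article_id: int, title: str, snippet: str) -> str:
    """Naive writer: join the fields with a '|' delimiter."""
    return "|".join([str(article_id), title, snippet])


def read_record(line: str) -> list:
    """Naive reader: split on the same delimiter."""
    return line.split("|")


ok = read_record(write_record(111011, "Intro to Protobuf", "A short tour"))
broken = read_record(write_record(111011, "Speed | Size", "A short tour"))

print(len(ok))      # 3
print(len(broken))  # 4
```

As soon as a title contains the delimiter, the reader sees four fields instead of three, so the format needs escaping rules that both sides must agree on and maintain.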

With protobuf, the reader and writer don't need to think about the message encoding format. The message data is described in the definition file, the compiler generates the access classes for us, and the reader and writer simply use the generated classes to accomplish their tasks. The generated classes have a series of methods for reading and modifying the data in the structured message. The serialization/deserialization process is handled entirely by the code generated by the protobuf compiler, which is very robust.

The following code demonstrates the reader and writer tasks:

#include <iostream>

#include <fstream>

#include <string>

#include "article_collection.pb.h"

using namespace std;

void PromptForArticle(tutorial::Article* article) {

cout << "Enter article ID number: ";

int id;

cin >> id;

article->set_id(id);

cin.ignore(256, '\n');

cout << "Enter article title: ";

getline(cin, *article->mutable_title());

cout << "Enter snippet: ";

getline(cin, *article->mutable_snippet());

cout << "Enter content: ";

getline(cin, *article->mutable_content());

cout << "Is this article featured? (yes/no): ";

string answer;

cin >> answer;

if (answer == "yes") {

article->set_isfeatured(true);

} else {

article->set_isfeatured(false);

}

tutorial::Article::Author *author = new tutorial::Article::Author();

cout << "Enter author id: ";

cin >> id;

author->set_id(id);

cin.ignore(256, '\n');

cout << "Enter author name: ";

getline(cin, *author->mutable_name());

cout << "Enter author email: ";

getline(cin, *author->mutable_email());

article->set_allocated_author(author);

while (true) {

cout << "Enter a topic (Science/Technology/Nature/Entertainment/Politics): ";

string topic;

getline(cin, topic);

if (topic.empty()) {

break;

}

if (topic == "Science") {

article->add_topic(tutorial::Article::SCIENCE);

} else if (topic == "Technology") {

article->add_topic(tutorial::Article::TECHNOLOGY);

} else if (topic == "Nature") {

article->add_topic(tutorial::Article::NATURE);

} else if (topic == "Entertainment") {

article->add_topic(tutorial::Article::ENTERTAINMENT);

} else if (topic == "Politics") {

article->add_topic(tutorial::Article::POLITICS);

}

}

}

void ListOfArticle(const tutorial::ArticleCollection& articleCollection) {

for (int i = 0; i < articleCollection.article_size(); ++i) {

const tutorial::Article& article = articleCollection.article(i);

cout << "Article ID: " << article.id() << endl;

cout << " Title: " << article.title() << endl;

cout << " Snippet: " << article.snippet() << endl;

cout << " Content: " << article.content() << endl;

cout << " Featured? " << (article.isfeatured() ? "yes" : "no") << endl;

cout << " Author id: " << article.author().id() << endl;

cout << " Author name: " << article.author().name() << endl;

cout << " Author email: " << article.author().email() << endl;

cout << " Topics: ";

for (int j = 0; j < article.topic_size(); ++j) {

tutorial::Article::Topic topic = article.topic(j);

switch(topic) {

case tutorial::Article::SCIENCE:

cout << "Science ";

break;

case tutorial::Article::TECHNOLOGY:

cout << "Technology ";

break;

case tutorial::Article::NATURE:

cout << "Nature ";

break;

case tutorial::Article::ENTERTAINMENT:

cout << "Entertainment ";

break;

case tutorial::Article::POLITICS:

cout << "Politics ";

break;

default:

continue;

}

}

cout << endl;

}

}

int main(int argc, char* argv[]) {

GOOGLE_PROTOBUF_VERIFY_VERSION;

if (argc != 2) {

cerr << "Usage: " << argv[0] << " Article_Collection_File" << endl;

return -1;

}

tutorial::ArticleCollection articleCollection;

{

// Read existing article collection file if it exists

ifstream input(argv[1], ios::in | ios::binary);

if (!input) {

cout << argv[1] << ": File not found. Creating a new file." << endl;

} else if (!articleCollection.ParseFromIstream(&input)) {

cerr << "Failed to parse article collection file." << endl;

}

}

// add an article, modify original data

PromptForArticle(articleCollection.add_article());

{ // Write data back to disk

ofstream output(argv[1], ios::out | ios::trunc | ios::binary);

if (!articleCollection.SerializeToOstream(&output)) {

cerr << "Failed to write article collection file." << endl;

return -1;

}

}

ListOfArticle(articleCollection);

// Optional: Delete all global objects allocated by libprotobuf.

google::protobuf::ShutdownProtobufLibrary();

return 0;

}

ParseFromIstream reads from an input stream and deserializes the data, while SerializeToOstream serializes the data and writes it to an output stream. To see the complete project, you can visit this github repository.

This example program is very simple, but with some careful modification it can become more useful. E.g. if we replace the disk with a network socket, we can exchange data over a network for a remote procedure call (RPC) system. Persistent data storage and data exchange over a network are the most important application areas for protobuf.
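One detail to keep in mind when moving from a file to a socket: a serialized protobuf message does not mark its own end, so a stream carrying several messages needs some framing. Below is a minimal sketch of one common approach, a length prefix. The 4-byte big-endian header and the write_frame/read_frame helpers are assumptions of this sketch, not part of protobuf, and an in-memory buffer stands in for the socket.

```python
import io
import struct


def write_frame(stream, payload: bytes) -> None:
    """Write a 4-byte big-endian length header followed by the message bytes."""
    stream.write(struct.pack(">I", len(payload)))
    stream.write(payload)


def read_frame(stream):
    """Read one framed message; return None on a clean end of stream."""
    header = stream.read(4)
    if len(header) < 4:
        return None
    (length,) = struct.unpack(">I", header)
    payload = stream.read(length)
    if len(payload) < length:
        raise EOFError("truncated frame")
    return payload


# Two already-serialized messages (arbitrary example bytes) sent back to back
channel = io.BytesIO()
write_frame(channel, bytes.fromhex("0807120359616e"))
write_frame(channel, bytes.fromhex("0808"))

channel.seek(0)
frames = []
while (frame := read_frame(channel)) is not None:
    frames.append(frame)

print([f.hex() for f in frames])  # ['0807120359616e', '0808']
```

Each recovered frame could then be handed to the generated parser (ParseFromString in C++ or Python) exactly as the file contents were in the example above.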

A more complicated example

After going through the first example, hopefully you have a basic understanding of protobuf. Now comes the interesting part: we will go through a real web app that uses protobuf to exchange data between client and server.

The web app has a React frontend and a Python backend web service. The frontend simply requests and displays data. The backend service generates some random data when it boots up and sends it when a client requests it.

Let's start with the dummy web service. In the demo-app/server folder of the repository, there are 3 files: app.py, data.py and article_collection_pb2.py. As you can guess, article_collection_pb2.py is generated by the protobuf compiler. data.py is the file that uses the generated proto classes; you can see the import statement import article_collection_pb2 at the top. This file is mainly responsible for generating random data when the server boots up, so that the data can be served to clients later. There is nothing fancy in the code, and most of it just constructs data. The most important part is probably the one that converts Python objects to their corresponding protobuf message representation, shown as follows:

...

for article in articles:

proto_articles.append(article_collection_pb2.Article(

id = article["id"],

title = article["title"],

snippet = article["snippet"],

content = article["content"],

isFeatured = article["isFeatured"],

topic = article["topics"],

author = article["author"]

))

...

app.py is where the server starts; it uses the Flask package to handle the heavy lifting of creating a server.

To start the web service, first make sure all the Python packages are installed by running pip install -r requirements.txt in your terminal. After all the packages are installed, type python app.py to start the server.

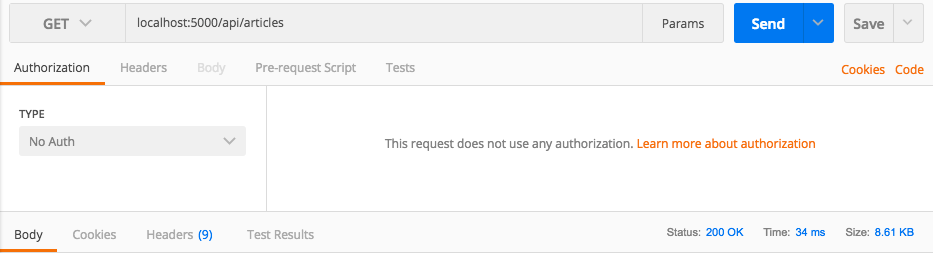

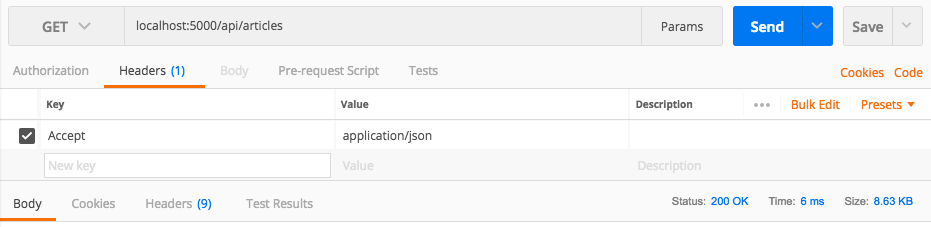

Then we can use postman (desktop version) to test our api endpoint. The following 2 figures demonstrates the difference between the requests for json version data and the binary version (protobuf) data:

Figure 1. Request for data (Protobuf)

Figure 2. Request for data (JSON)

As you can see, the data transmitted as a protobuf message is smaller than the same data as JSON. The difference is relatively small here, since the demo app is not a large-scale program. But as you can imagine, once the program scales up, the amount of data being transmitted increases dramatically, and the savings in data size become obvious.
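The size difference can also be reproduced without the demo app. The sketch below hand-encodes one Author message following the protobuf wire format and compares it with compact JSON for the same data; encode_author and the other helpers are illustrative, not generated code, and the values are the ones used earlier in this blog.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)
        else:
            out.append(low7)
            return bytes(out)


def encode_string_field(field_number: int, text: str) -> bytes:
    """Key (wire type 2), then byte length, then the UTF-8 bytes."""
    data = text.encode("utf-8")
    return encode_varint((field_number << 3) | 2) + encode_varint(len(data)) + data


def encode_author(author_id: int, name: str, email: str) -> bytes:
    """Author { int32 id = 1; string name = 2; string email = 3; }"""
    return (
        encode_varint((1 << 3) | 0) + encode_varint(author_id)
        + encode_string_field(2, name)
        + encode_string_field(3, email)
    )


author = {"id": 1111, "name": "Yan", "email": "yan@example.com"}
proto_bytes = encode_author(author["id"], author["name"], author["email"])
json_bytes = json.dumps(author, separators=(",", ":")).encode("utf-8")

print(len(proto_bytes), len(json_bytes))  # 25 50
```

Most of the saving comes from the field names: JSON repeats "id", "name" and "email" in every object, while protobuf sends a one-byte key per field.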

To decode the binary data that the backend encoded with protobuf, the frontend app uses a JavaScript library called protobuf.js, which is a JavaScript implementation of protobuf. With this library, we can just give it our .proto file, and it will handle all the heavy work of compiling the file and encoding/decoding messages for us. It is easy to use and fast.

In the app.js file under the demo-app/client/src/ folder, pay attention to the two methods loadMessageDef() and loadArticles(), where the actual magic happens.

...

import protobuf from "protobufjs";

...

async loadMessageDef() {

try {

// load protobuf messages definitions

const root = await protobuf.load("article_collection.proto");

// obtain message type

this.ArticleCollection = root.lookupType("tutorial.ArticleCollection");

} catch (err) {

console.error(err);

}

}

async loadArticles() {

try {

const response = await axios.get("http://localhost:5000/api/articles", {

responseType: "arraybuffer"

});

// decode encoded data received from server

const articleCollection = this.ArticleCollection.decode(new Uint8Array(response.data));

this.setState({articles: articleCollection.article});

} catch (err) {

console.error(err);

}

}

...

As you can see, protobuf.js makes data handling a lot easier for us. To start the client app, go to the demo-app/client/ folder and type npm start in the terminal. Then you can start interacting with it in the browser.

Comparison with other technologies

Hopefully you now have a more in-depth knowledge of protobuf. Some people will probably ask why not use other existing technologies like XML or JSON. So what's the difference?

A simple answer: protobuf is simple and fast.

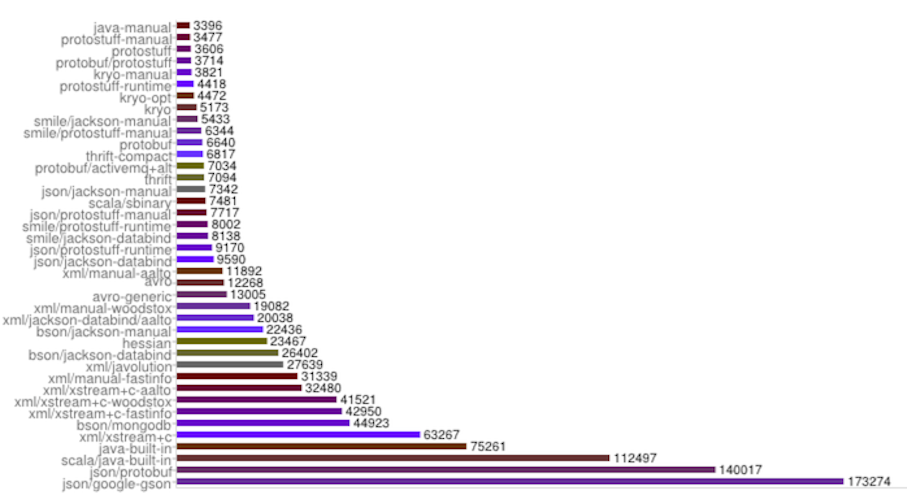

To back this up, the thrift-protobuf-compare project compared these technologies. Figure 3 displays the test results; each bar represents the total time for manipulating an object, including creating the object, serializing it and then deserializing it.

Figure 3. Performance test results [2]

From the test result we can see that protobuf performed very well. If you are interested, you can visit thrift-protobuf-compare project website for more detail.

Advantage of protobuf

As stated at the beginning of this blog, protobuf is smaller, faster and simpler than XML. You have full control over your structured data definition: you define your messages once in protobuf, and then you can use a variety of languages to read and write your message data from different data streams. You can even update your message definition without breaking programs that are already deployed, since protobuf supports backward compatibility.

Protobuf syntax is also clearer, and it doesn't require an interpreter the way XML does. This is because the protobuf compiler compiles the message definition into the corresponding data access classes, which do the serialization/deserialization.

Disadvantage of protobuf

Even though protobuf has many advantages over XML, it still cannot completely replace XML. Protobuf is not well suited to representing complex concepts. It is currently used mainly by Google and is not yet widely adopted.

Also, protobuf cannot be used to model text-based documents with markup. XML is self-describing, while a protobuf message only makes sense if its message definition is available. [1]

And it also adds a little bit of development effort compared to just using JSON, as can be seen from the above example.

At last

Hopefully, after reading this introductory article, you have learned a new serialization/deserialization technology that you can add to your toolbox. Whether or not to use it in your project really depends on the situation. If your project cares about performance, speed and data size, protobuf might be a good candidate, especially for an RPC system. But for a regular web app, I think JSON is probably enough.

References

[1] Protocol Buffers, https://developers.google.com/protocol-buffers/docs/overview

[2] Thrift Protobuf Compare, http://code.google.com/p/thrift-protobuf-compare/wiki/Benchmarking